This is something I wanted to document for myself, as it is information I need on a regular basis and I always have trouble finding it, or at least finding the correct bits and pieces. I was more or less triggered by this excellent white paper that Herco van Brug wrote. I do want to invite everyone out there to comment. I will roll up every useful comment into this article to make it a reference point for designing your storage layout based on performance indicators.

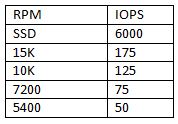

The basics are simple: RAID introduces a write penalty. The question, of course, is how many IOps you need per volume and how many disks this volume should contain to meet the requirements. First, the disk types and the number of IOps per disk. Keep in mind I’ve tried to keep values on the safe side:

(I’ve added SSD with 6000 IOps as commented by Chad Sakac)

So how did I come up with these numbers? I bought a bunch of disks, measured the IOps several times, used several brands and calculated the average… well sort of. I looked it up on the internet and took 5 articles and calculated the average and rounded the outcome.

[edit]

Many asked where these numbers came from. Like I said, it’s an average of theoretical numbers. In the comments there’s a link to a ZDNet article which I used as one of the sources. ZDNet explains what the theoretical maximum number of IOps is for a disk. In short: it is based on the “average seek time” and half of the time a single rotation takes. These two values added up give the time an average IO takes. There are 1000 milliseconds in every second, so divide 1000 by this value and you have the theoretical maximum number of IOps. Keep in mind though that this is based on “random” IO. With sequential IO these numbers will of course be different on a single drive.

[/edit]
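As a rough sketch of the calculation described in the edit above, the snippet below adds the average seek time to half a rotation and divides 1000 ms by the result. The function name and the example seek times are my own illustrative assumptions, not values from the ZDNet article or from the table in this post.

```python
def theoretical_max_iops(avg_seek_ms: float, rpm: int) -> float:
    """Theoretical maximum random IOps for a single spinning disk."""
    full_rotation_ms = 60_000 / rpm          # time for one full rotation
    half_rotation_ms = full_rotation_ms / 2  # average rotational latency
    avg_io_time_ms = avg_seek_ms + half_rotation_ms
    return 1000 / avg_io_time_ms             # 1000 ms in every second


# Hypothetical drives; the seek times are assumptions for illustration only.
print(round(theoretical_max_iops(avg_seek_ms=3.5, rpm=15_000)))  # 182
print(round(theoretical_max_iops(avg_seek_ms=8.5, rpm=7_200)))   # 79
```

This only covers random IO on a single spindle; caches, queueing and sequential workloads will move the real number.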

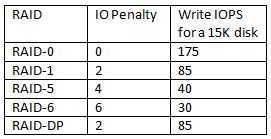

So what if I add these disks to a RAID group?

For “read” IOps it’s simple: RAID Read IOps = Sum of all Single Disk IOps.

For “write” IOps it is slightly more complicated as there is a penalty introduced:

So how do we factor this penalty in? Well, it’s simple. For RAID-5, for instance, every single write requires 4 IOs on the backend. That’s the penalty which is introduced when selecting a specific RAID type. This also means that although you think you have enough spindles in a single RAID set, you might not, due to the introduced penalty and the ratio of writes to reads.
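To make the read and write rules above concrete, here is a minimal sketch that computes the pure-read and pure-write ceilings of a RAID set. The function name and the 175 IOps per disk figure are assumptions for illustration; only the RAID-5 penalty of 4 comes from the text, and cache and controller effects are ignored.

```python
def raid_set_iops(disk_iops: list[int], write_penalty: int) -> tuple[int, float]:
    """Return (read IOps, write IOps) ceilings for a RAID set."""
    read_iops = sum(disk_iops)               # reads: sum of all single disk IOps
    write_iops = read_iops / write_penalty   # each write costs `write_penalty` IOs
    return read_iops, write_iops


# Hypothetical RAID-5 set of 8 disks at an assumed 175 IOps each.
disks = [175] * 8
reads, writes = raid_set_iops(disks, write_penalty=4)
print(reads, writes)  # 1400 350.0
```

The same eight spindles that can serve 1400 reads per second can only absorb 350 writes per second under these assumptions.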

I found a formula and tweaked it a bit so that it fits our needs:

(TOTAL IOps × % READ) + ((TOTAL IOps × % WRITE) × RAID Penalty)

So, for instance, for RAID-5 and a VM which produces 1000 IOps with 40% reads and 60% writes:

(1000 x 0.4) + ((1000 x 0.6) x 4) = 400 + 2400 = 2800 IO’s
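The formula and the worked example above can be sketched in a few lines. The function and parameter names are mine, and the read percentage is passed as a fraction between 0 and 1.

```python
def backend_iops(total_iops: float, read_fraction: float, raid_penalty: int) -> float:
    """(TOTAL IOps x % READ) + ((TOTAL IOps x % WRITE) x RAID Penalty)."""
    if not 0.0 <= read_fraction <= 1.0:
        raise ValueError("read_fraction must be between 0 and 1")
    write_fraction = 1.0 - read_fraction
    reads = total_iops * read_fraction
    writes = total_iops * write_fraction * raid_penalty
    return reads + writes


# The RAID-5 example from the text: 1000 IOps, 40% reads, penalty of 4.
print(round(backend_iops(1000, 0.4, 4)))  # 2800
```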

The 1000 IOps this VM produces actually result in 2800 IOs on the backend of the array. This makes you think, doesn’t it?
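To turn a backend number like this into a spindle count, divide it by what a single disk can deliver and round up. This is a minimal sketch; the per-disk figures of 175 and 75 IOps are assumed values for illustration, so substitute the numbers for your own disk type.

```python
import math


def disks_needed(backend_io: float, iops_per_disk: float) -> int:
    """Minimum number of disks needed to serve the given backend IOps."""
    if iops_per_disk <= 0:
        raise ValueError("iops_per_disk must be positive")
    return math.ceil(backend_io / iops_per_disk)


# 2800 backend IOs from the RAID-5 example, with assumed per-disk figures.
print(disks_needed(2800, iops_per_disk=175))  # 16
print(disks_needed(2800, iops_per_disk=75))   # 38
```

Rounding up matters here: a fractional disk still means buying a whole one.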

Real life examples

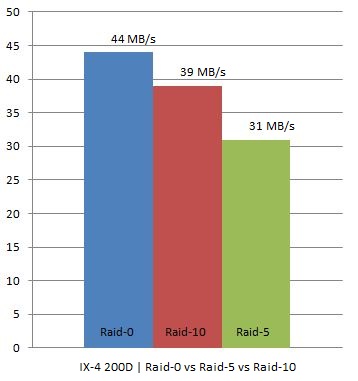

I have two IX4-200Ds at home which are capable of doing RAID-0, RAID-10 and RAID-5. As I was rebuilding my homelab I thought I would try to see what changing RAID levels would do on these homelab / s(m)b devices. Keep in mind this is by no means an extensive test. I used IOmeter with 100% Write (Sequential) and 100% Read (Sequential). Read was consistent at 111MB/s for every single RAID level. For Write I/O, however, this was clearly different, as expected. I did all tests 4 times to get an average and used a block size of 64KB, as Gabe’s testing showed this was the optimal setting for the IX4.

In other words, we are seeing what we were expecting to see. As you can see, RAID-0 had an average throughput of 44MB/s and RAID-10 still managed to reach 39MB/s, but RAID-5 dropped to 31MB/s, which is roughly 21% less than RAID-10.
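For anyone who wants to check the percentages, here is a small sketch that compares the measured write throughputs quoted above. The helper name is my own; the throughput values are the averages from my tests.

```python
def percent_drop(baseline_mbps: float, measured_mbps: float) -> float:
    """Relative drop of `measured_mbps` versus `baseline_mbps`, in percent."""
    return (baseline_mbps - measured_mbps) / baseline_mbps * 100


# Average sequential write throughput on the IX4-200D, in MB/s.
write_mbps = {"RAID-0": 44, "RAID-10": 39, "RAID-5": 31}

print(round(percent_drop(write_mbps["RAID-10"], write_mbps["RAID-5"])))  # 21
print(round(percent_drop(write_mbps["RAID-0"], write_mbps["RAID-10"])))  # 11
print(round(percent_drop(write_mbps["RAID-0"], write_mbps["RAID-5"])))   # 30
```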

I hope I can do the “same” tests on one of the arrays or preferably both (EMC NS20 or NetApp FAS2050) we have in our lab in Frimley!
